Research

The following are high-level descriptions of some of the research problems I have been working on.

Multi-objective Prompt Optimization in Large Language Models (LLMs)

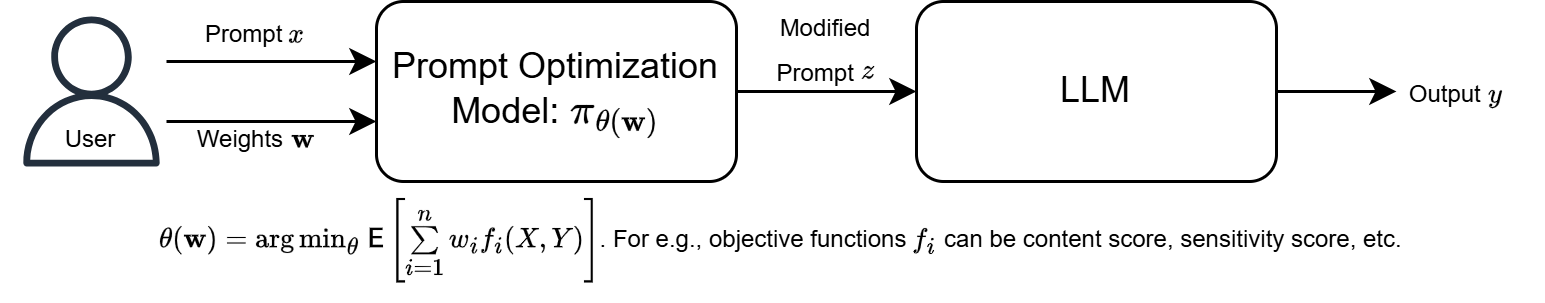

We explore prompt optimization, a powerful technique for maximizing the effectiveness of LLMs. Prompt optimization enables the design of tailored prompts that fully exploit LLMs’ capabilities without the need for fine-tuning. We focus on the challenge of crafting prompts that optimize the trade-offs among multiple objectives, thereby enhancing the efficiency and output of LLMs.

Existing methods primarily focus on maximizing performance with respect to a single objective. Although recently proposed methods for optimizing multiple objectives successfully generate prompts along the trade-off curve, they struggle to target a specific point on the curve without retraining the prompt optimization model.

Taking inspiration from variable-rate deep compression techniques, we develop a novel method of designing prompts that optimize any given weighted sum of the objectives. This approach allows users to control the trade-offs between objectives by specifying the desired weights along with an initial prompt, which serves as input to the prompt optimization model.
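To make the weighted-sum objective concrete, here is a minimal sketch of scalarization over a fixed pool of candidate prompts. It is not the prompt optimization model described above: the candidate names, the two objectives (accuracy and brevity), and their scores are hypothetical, and the helper functions `weighted_score` and `pick_prompt` are introduced only for illustration. The sketch shows only how changing the weights moves the selected prompt along the trade-off curve.

```python
from typing import Dict, Sequence


def weighted_score(objectives: Sequence[float], weights: Sequence[float]) -> float:
    """Scalarize several objective values into one number via a weighted sum."""
    if len(objectives) != len(weights):
        raise ValueError("objectives and weights must have the same length")
    return sum(w * f for w, f in zip(weights, objectives))


def pick_prompt(candidates: Dict[str, Sequence[float]],
                weights: Sequence[float]) -> str:
    """Return the candidate prompt with the highest weighted-sum score."""
    return max(candidates, key=lambda p: weighted_score(candidates[p], weights))


# Hypothetical candidates scored on (accuracy, brevity), both in [0, 1].
# None of the three dominates another, so each lies on the trade-off curve.
CANDIDATES = {
    "terse prompt": (0.60, 0.90),
    "balanced prompt": (0.80, 0.75),
    "detailed prompt": (0.90, 0.30),
}
```

Calling `pick_prompt(CANDIDATES, (1.0, 0.0))` favors accuracy alone, while `(0.0, 1.0)` favors brevity alone; intermediate weights select intermediate points without changing anything but the weight vector.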


Distributed and Adaptive Feature Compression

Machine learning models are commonly trained by collecting data from various relevant sources and training on the aggregated data. In many applications, however, the input data at inference time is collected from distributed sources. Examples include Internet of Things (IoT) networks, security systems with surveillance sensors, and driverless cars that collect data from sensors and receive data from wireless receivers. In these applications, the volume of data is generally high and decisions are time-sensitive, so low latency is important. Moreover, when the data is communicated over wireless channels, bit-rates can be quite low, either to conserve energy or because of poor channel conditions. It is therefore imperative to optimize the data-stream pipelines so that they deliver as much information relevant to the downstream task as possible. In practice these pipelines are also subject to changes in bit-rate, so solutions must adapt to these changes. In this work, we try to answer the following question:

How can we maximize the information (relevant to the downstream task) received by a pretrained model at inference time when the input data is collected in a distributed way through communication-constrained channels that are subject to change?


Robust Estimation

Robust mean estimation in high dimensions has received considerable interest recently and has found applications in areas such as federated learning. The goal is to robustly estimate the true mean from a sample (drawn from a distribution with unknown mean and bounded covariance matrix) that has been corrupted by an adversary who has unlimited computational power, knows the estimator being used, and can corrupt up to an \(\epsilon<1/2\) fraction of the sample. We provided an optimal outlier-fraction-agnostic estimator that achieves the information-theoretic limit. The following table compares the theoretical guarantees of our methods with those of other state-of-the-art works.

| Algorithm | Complexity | Error guarantee | Breakdown point | Needs \(\epsilon\) |
| --- | --- | --- | --- | --- |
| Tukey median | NP-hard | \(O(\sigma\sqrt{\epsilon})\) | \(\frac{1}{d+1}\) | No |
| Diakonikolas et al. 2017 | \(\mathrm{poly}(d,1/\epsilon)\) | \(O(\sigma\sqrt{\epsilon})\) | NA | Yes |
| Cheng et al. 2019 | \(\tilde{O}\left(\frac{nd}{\epsilon^6}\right)\) | \(O(\sigma\sqrt{\epsilon})\) | \(\frac{1}{3}\) | Yes |
| Dong et al. 2019 | \(\tilde{O}(nd)\) | \(O(\sigma\sqrt{\epsilon})\) | NA | Yes |
| Zhu et al. 2022 | \(\tilde{O}(n^2d)\) | \(O\left(\sigma\frac{\sqrt{\epsilon}}{1-2\epsilon}\right)\) | \(\frac{1}{2}\) | Yes |
| Feasibility problem 2022 | NA | \(O\left(\sigma\sqrt{\frac{\epsilon}{1-2\epsilon}}\right)\) | \(\frac{1}{2}\) | Yes |
| Opt. problems\(^*\) 2022 | NA | \(O\left(\sigma\sqrt{\frac{\epsilon}{1-2\epsilon}}\right)\) | \(\frac{1}{2}\) | No |
| Algo 1\(^*\) 2022 | \(\tilde{O}(nd)\) | \(O(\sigma\sqrt{\epsilon})\) | \(1-\frac{1}{\sqrt{2}}\approx 0.3\) | No |
| Algo 2\(^*\) 2023 | \(\tilde{O}(\max\{\epsilon n^2d, nd^2\})\) | \(O\left(\sigma\frac{\sqrt{\epsilon}}{1-2\epsilon}\right)\) | \(\frac{1}{2}\) | No |

\(^*\) refers to our contributions.
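The contamination setting can be illustrated with a small simulation. The sketch below is not any of the estimators in the table. It assumes a standard Gaussian sample with true mean zero and a simple, non-adaptive adversary that replaces an \(\epsilon\) fraction of the points with one far-away point, and it compares the sample mean with the coordinate-wise median, a simple baseline whose error is known to degrade as the dimension grows. All function names and parameters here are hypothetical.

```python
import math
import random
import statistics
from typing import List


def corrupted_sample(n: int, d: int, eps: float, shift: float,
                     rng: random.Random) -> List[List[float]]:
    """Draw n points from N(0, I_d), then overwrite int(eps * n) of them
    with the point (shift, ..., shift) to mimic a crude adversary."""
    points = [[rng.gauss(0.0, 1.0) for _ in range(d)] for _ in range(n)]
    for i in range(int(eps * n)):
        points[i] = [shift] * d
    return points


def sample_mean(points: List[List[float]]) -> List[float]:
    """Coordinate-wise average; a single far outlier can move it arbitrarily."""
    n = len(points)
    return [sum(col) / n for col in zip(*points)]


def coordinatewise_median(points: List[List[float]]) -> List[float]:
    """Median of each coordinate taken separately (a simple robust baseline)."""
    return [statistics.median(col) for col in zip(*points)]


def l2_norm(v: List[float]) -> float:
    """Euclidean norm; equals the estimation error because the true mean is 0."""
    return math.sqrt(sum(x * x for x in v))
```

For example, with `pts = corrupted_sample(2000, 5, 0.1, 50.0, random.Random(0))`, comparing `l2_norm(sample_mean(pts))` with `l2_norm(coordinatewise_median(pts))` shows how strongly the outliers pull the sample mean away from the true mean.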

Identification problems in multi-armed bandits (MABs)

In the stochastic multi-armed bandit framework, the typical goal is to play the best arm as often as possible. An important variation of this problem is to find the best arm as quickly as possible under an error probability constraint. Other identification problems include odd-arm identification, wherein the task is to find the odd arm, whose distribution differs from that of the other arms, all of which share the same distribution. We formulated a composite multi-hypothesis testing framework that encompasses not only these identification problems (e.g., top-\(k\) arms identification and odd-arm identification) but also general hypotheses involving the distributions of all arms. We established an information-theoretic lower bound on the expected stopping time under a false-alarm constraint and provided an asymptotically optimal policy. A motivating example of the general framework is given in the following figure.

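As a concrete instance of best-arm identification under an error probability constraint, the sketch below implements successive elimination, a textbook procedure. It is not the asymptotically optimal policy mentioned above. It assumes Bernoulli arms, a Hoeffding-style confidence radius, and a hypothetical `max_rounds` budget as a safety stop; the function name and parameters are chosen for illustration only.

```python
import math
import random
from typing import List, Tuple


def successive_elimination(means: List[float], delta: float,
                           rng: random.Random,
                           max_rounds: int = 100_000) -> Tuple[int, int]:
    """Identify the best Bernoulli arm with error probability at most delta.

    Every active arm is pulled once per round. An arm is dropped when its
    empirical mean falls more than two confidence radii below the current
    leader. Returns (chosen arm index, number of rounds used).
    """
    k = len(means)
    active = list(range(k))
    sums = [0.0] * k  # cumulative reward of each arm while it is active
    for t in range(1, max_rounds + 1):
        for arm in active:
            sums[arm] += 1.0 if rng.random() < means[arm] else 0.0
        # Hoeffding radius; a union bound over arms and rounds keeps the
        # total failure probability below delta.
        radius = math.sqrt(math.log(4 * k * t * t / delta) / (2 * t))
        best = max(sums[a] / t for a in active)
        active = [a for a in active if best - sums[a] / t <= 2 * radius]
        if len(active) == 1:
            return active[0], t
    # Budget exhausted: fall back to the empirically best remaining arm.
    return max(active, key=lambda a: sums[a]), max_rounds
```

The number of rounds returned plays the role of the stopping time: arms whose means are close to the best arm's take longer to eliminate, which is why lower bounds on the expected stopping time depend on how distinguishable the arm distributions are.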